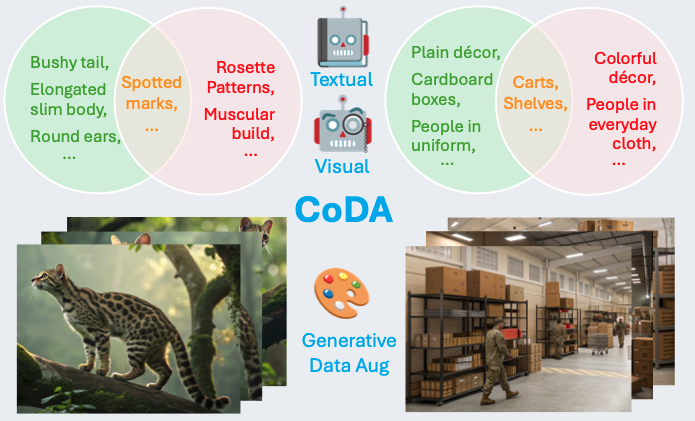

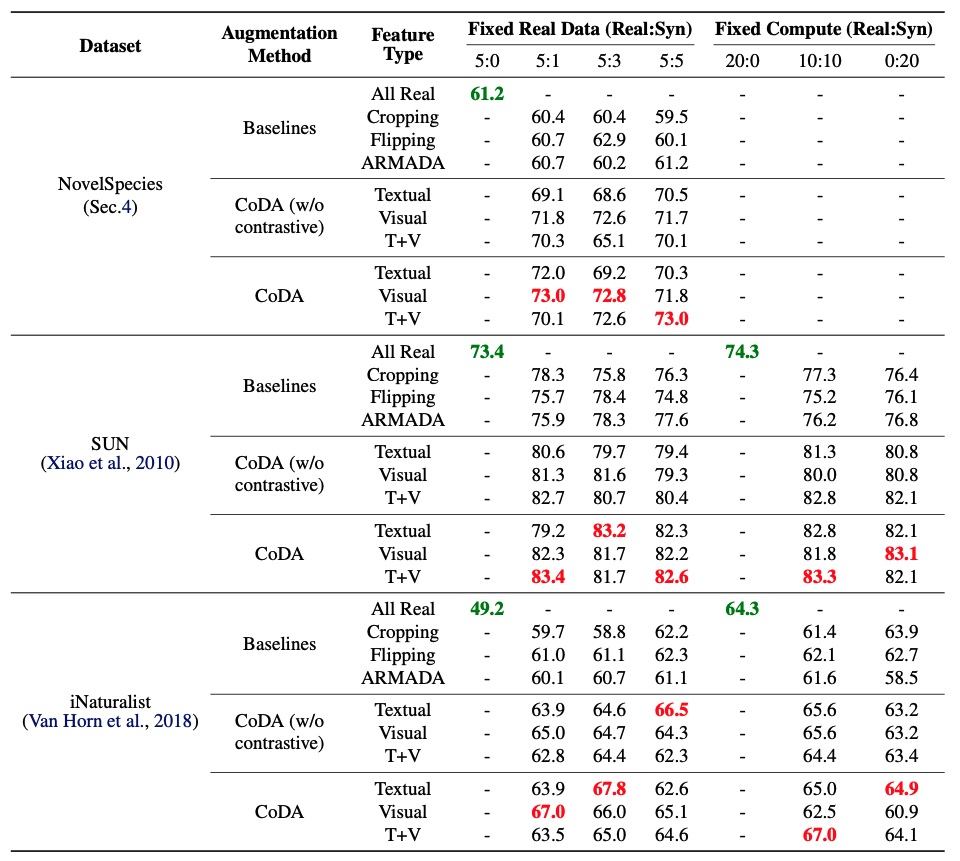

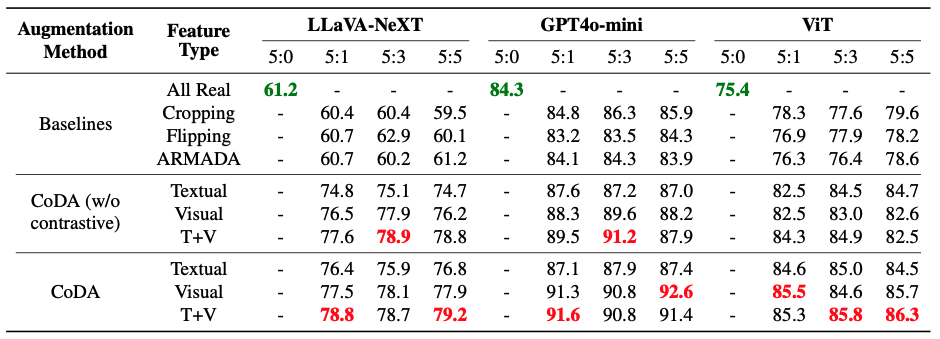

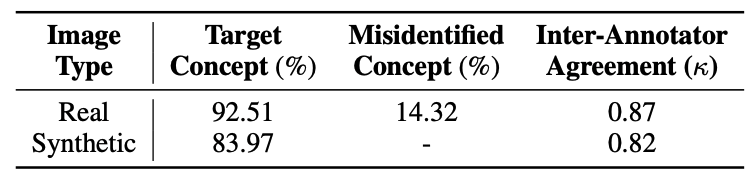

Large multimodal models (LMMs) often struggle to recognize novel concepts, as they rely on pre-trained knowledge and have limited ability to capture subtle visual details. Domain-specific knowledge gaps in training also make them prone to confusing visually similar, commonly misrepresented, or low-resource concepts. To help LMMs better align nuanced visual features with language, and thereby improve their ability to recognize and reason about novel or rare concepts, we propose a Contrastive visual Data Augmentation (CoDA) strategy. CoDA extracts key contrastive textual and visual features that distinguish target concepts from the known concepts they are misrecognized as, and then uses multimodal generative models to produce targeted synthetic data. Extracted features and augmented images are automatically filtered to guarantee their quality, as verified by human annotators. We show the effectiveness and efficiency of CoDA on low-resource concept and diverse scene recognition datasets, including iNaturalist and SUN. We additionally collect NovelSpecies, a benchmark dataset consisting of newly discovered animal species that are guaranteed to be unseen by LMMs. One-shot updating results with LLaVA-1.6 on these three datasets show that CoDA significantly improves over SOTA visual data augmentation strategies, with absolute accuracy gains of 12.3% (NovelSpecies), 5.1% (SUN), and 6.0% (iNat).

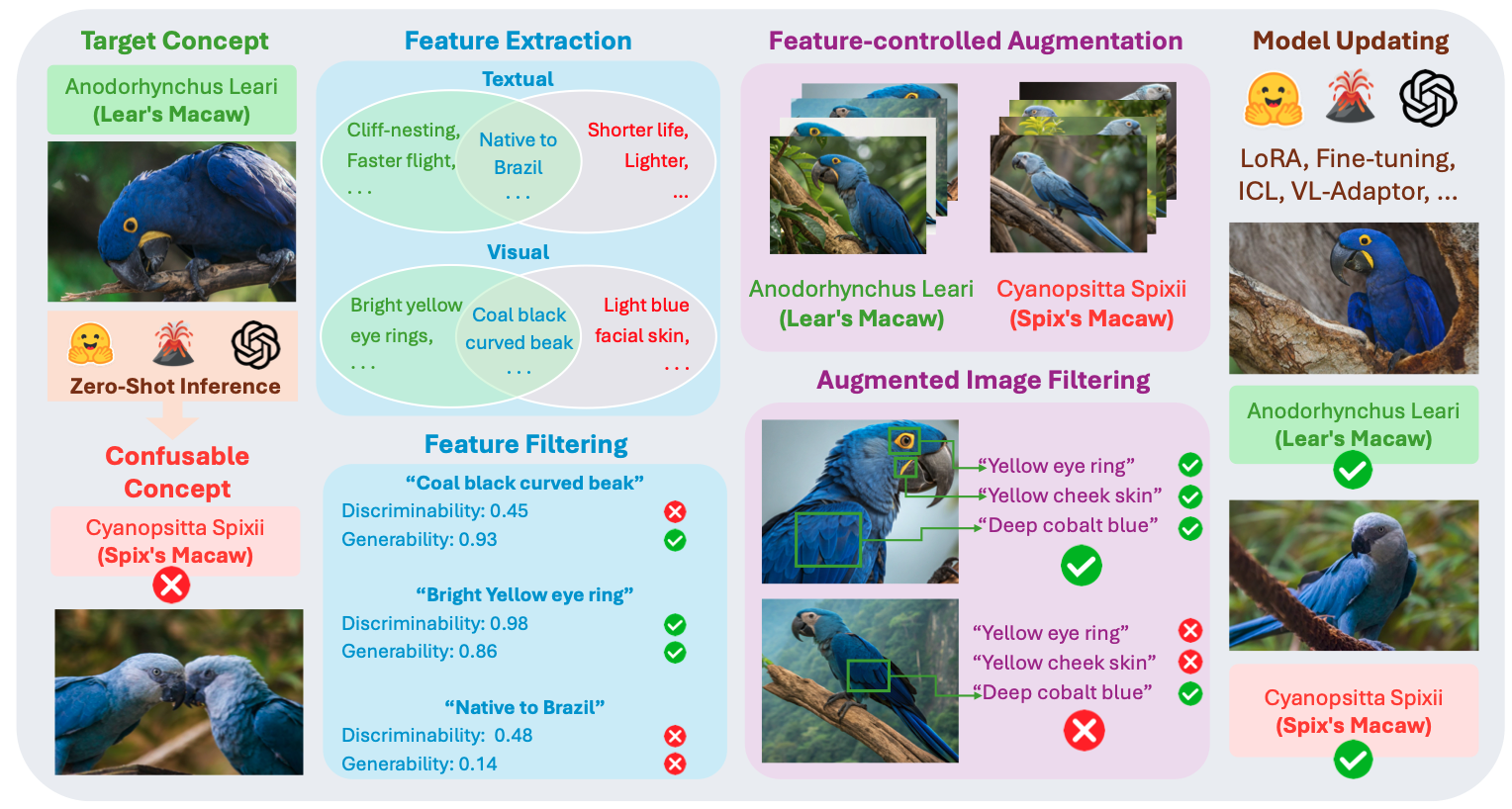

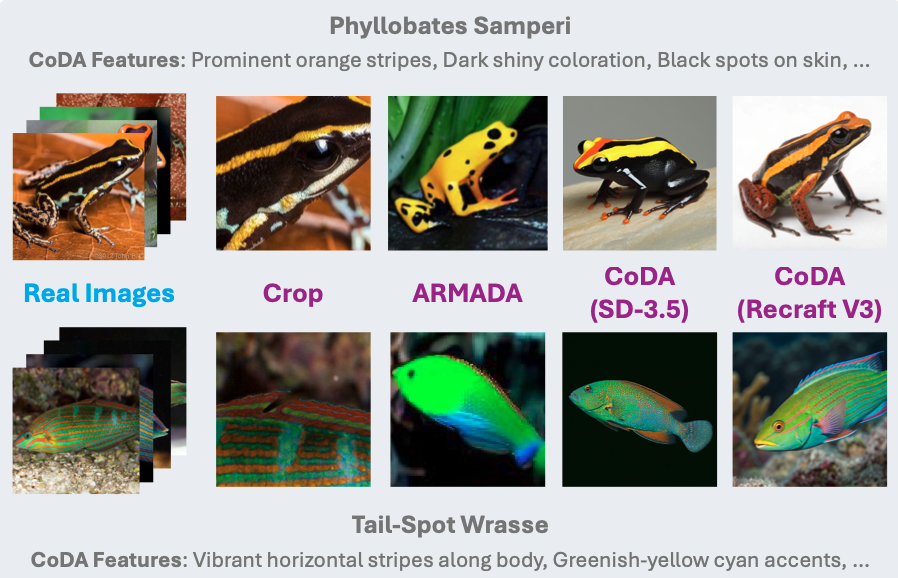

As shown in the figure, the CoDA method consists of four stages: Feature Extraction, Feature Filtering, Feature-controlled Augmentation, and Augmented Image Filtering. The target concept and the misidentified concept are each highlighted in the figure. The feature filtering scores shown are for illustration only. The example concepts, Anodorhynchus Leari (Lear’s Macaw) and Cyanopsitta Spixii (Spix’s Macaw), are from the iNaturalist dataset, and the augmented images are produced by the Recraft V3 model.
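The four stages can be read as a simple pipeline. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: `coda_augment`, its parameters, the threshold values, and the `toy_*` stand-ins are all hypothetical names introduced here. In practice the extractor and scorers would be backed by an LMM and the generator by a text-to-image model.

```python
from typing import Callable, List, Sequence, TypeVar

Image = TypeVar("Image")  # whatever object the (hypothetical) generator returns


def coda_augment(
    target: str,
    confused_with: str,
    extract_features: Callable[[str, str], List[str]],
    score_feature: Callable[[str, str, str], float],
    generate_image: Callable[[str, Sequence[str]], Image],
    score_image: Callable[[Image, Sequence[str]], float],
    feature_threshold: float = 0.5,  # assumed cutoff, for illustration only
    image_threshold: float = 0.5,    # assumed cutoff, for illustration only
    n_images: int = 4,
) -> List[Image]:
    """Run the four stages in order and return the images that survive filtering."""
    # 1. Feature Extraction: contrast the target with the concept it is mistaken for.
    candidates = extract_features(target, confused_with)

    # 2. Feature Filtering: keep only features whose score clears the threshold.
    kept = [
        f for f in candidates
        if score_feature(f, target, confused_with) >= feature_threshold
    ]
    if not kept:
        return []

    # 3. Feature-controlled Augmentation: generate images conditioned on kept features.
    images = [generate_image(target, kept) for _ in range(n_images)]

    # 4. Augmented Image Filtering: drop images that fail to show the kept features.
    return [img for img in images if score_image(img, kept) >= image_threshold]


# Toy stand-ins so the sketch runs end to end; the scores are made up.
def toy_extract(target: str, confused_with: str) -> List[str]:
    return ["feature A", "feature B", "feature C"]


def toy_feature_score(feature: str, target: str, confused_with: str) -> float:
    return {"feature A": 0.9, "feature B": 0.2, "feature C": 0.7}[feature]


def toy_generate(target: str, features: Sequence[str]) -> str:
    return f"<image of {target} showing {', '.join(features)}>"


def toy_image_score(image: str, features: Sequence[str]) -> float:
    return 1.0 if all(f in image for f in features) else 0.0


augmented = coda_augment(
    "target concept", "confused concept",
    toy_extract, toy_feature_score, toy_generate, toy_image_score,
    n_images=2,
)
print(augmented)
```

Passing each stage in as a callable keeps the control flow independent of any particular model, so a different generator or scorer can be swapped in without touching the pipeline itself.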

Phyllobates Samperi is a new species of hypertoxic poison dart frog first described in 2024; it does not currently have a common name. This concept is an example from the NovelSpecies dataset. The image on the left is the original image of the frog, and the images on the right are the augmented images generated by CoDA and the baselines.

@inproceedings{zhou2025contrastive,

title={Contrastive Visual Data Augmentation},

author={Zhou, Yu and Li, Bingxuan and Tang, Mohan and Jin, Xiaomeng and Wu, Te-Lin and Huang, Kuan-Hao and Ji, Heng and Chang, Kai-Wei and Peng, Nanyun},

booktitle={arXiv preprint arXiv:2502.17709},

year={2025}

}